Newshub

May 22, 2025•4 mins read

Tesonet launches initiative to bring AI tools to schools in Lithuania

April 11, 2025•2 mins read

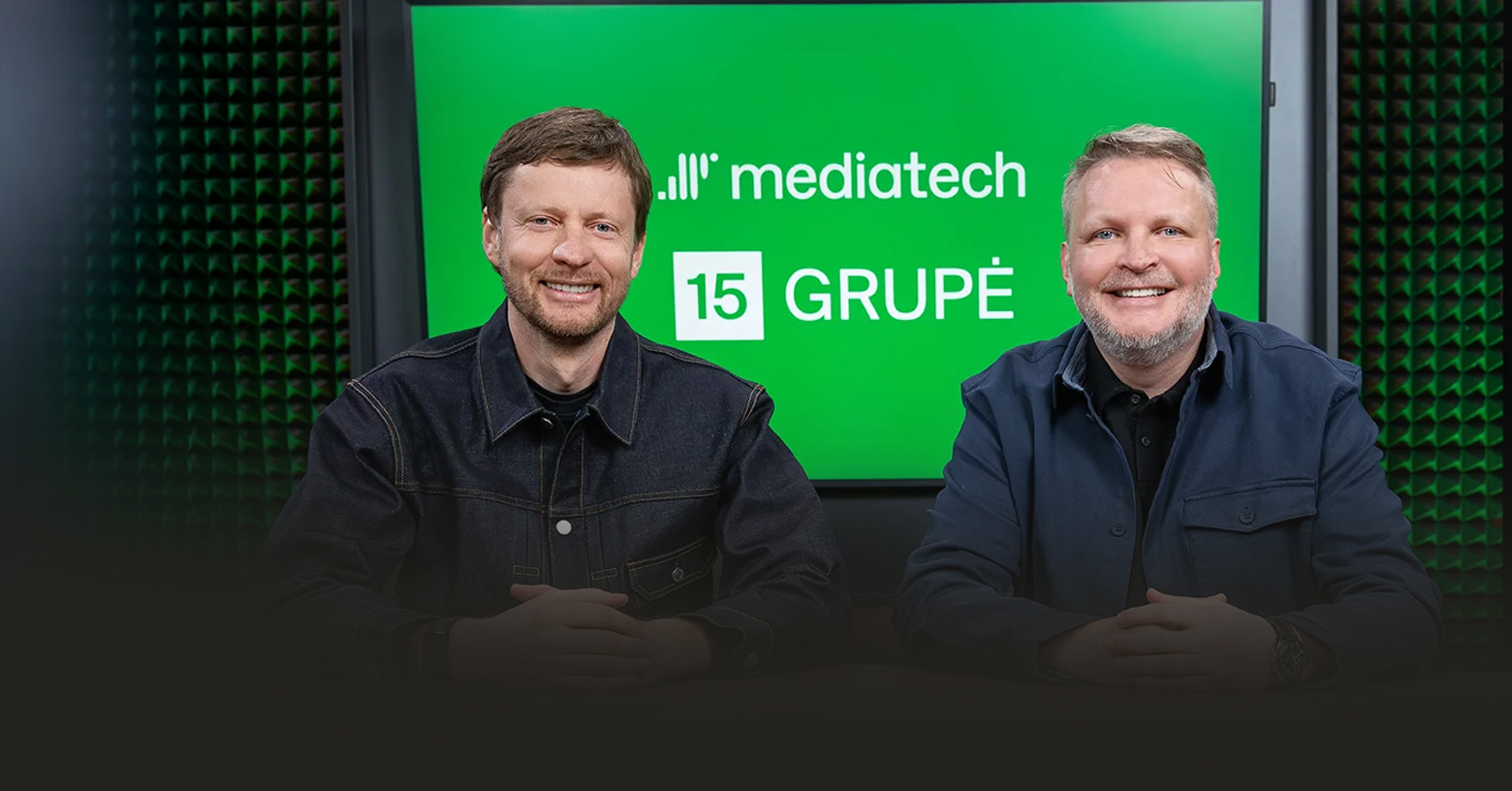

Tesonet portfolio company Mediatech invests in 15min group

March 12, 2025•2 mins read

Tesonet Plans to Build New Basketball Arena in London

February 27, 2025•2 mins read

Tesonet Foundation Launch: Not Just Charity—Strategy

January 15, 2025•5 mins read

nexos.ai emerges from stealth to launch an AI orchestration platform for the enterprise with funding led by Index Ventures

December 30, 2024•8 mins read

AI: Friend, Foe, or CEO? 5 Trends in 2025
